ChatGPT, an advanced language model developed by OpenAI, has the potential to change how researchers approach security testing. Because it can understand and generate natural language, ChatGPT can be used to simulate realistic attacks and evaluate the defenses of systems more efficiently. ChatGPT can also be used to analyze large sets of data, such as logs and reports, to identify potential vulnerabilities and threats. Overall, integrating ChatGPT into security testing can extend the capabilities of security researchers and improve the overall security of systems. In this report, we explore its potential for security researchers and show how scripts can be generated for automated security testing.

Recently, Microsoft has invested $1 billion in OpenAI to support the company’s research and development of advanced artificial intelligence technologies. The partnership aims to integrate OpenAI’s technology into Microsoft’s products, such as the Bing search engine, to improve their capabilities and provide more accurate and relevant search results to users. Additionally, the companies will work together to develop new AI-powered technologies and applications that can benefit customers and industries.

Artificial intelligence (AI) has been making significant strides in various fields, and the field of security testing is no exception. With the increasing complexity of software systems and the ever-evolving threat landscape, security testing has become a challenging task. The use of AI, particularly natural language processing (NLP) based models like ChatGPT, can potentially revolutionize the way security testing is conducted. ChatGPT AI, a large language model trained by OpenAI, has shown promise in various tasks such as text completion, summarization, and question answering.

In this report, we will delve into the opportunities and challenges of using ChatGPT AI in security testing. We will explore how ChatGPT AI can be used to automate various security testing tasks, such as vulnerability scanning and penetration testing. Additionally, we will examine the potential of ChatGPT AI in improving the accuracy and efficiency of security testing. However, it is important to consider the challenges that come with using AI in security testing, such as data privacy and ethical issues. This report aims to provide a comprehensive overview of the current state of ChatGPT AI in security testing and its potential future developments.

Key Takeaways:

Acknowledgements:

We would like to acknowledge the following for their contributions to this report:

ChatGPT AI in security testing can open a world of opportunities for organizations to improve their software systems’ security posture. One of the key opportunities in using ChatGPT AI in security testing is the ability to automate tasks such as vulnerability scanning and penetration testing, which can help security testers to focus on more critical issues.

1. Automation of Security Tasks:

Automation of security tasks using ChatGPT AI can benefit security testing by saving time and effort and improving the accuracy of the results. ChatGPT AI can automate repetitive and time-consuming tasks such as vulnerability scanning and penetration testing as part of security testing. This can improve accuracy and efficiency of security testing by providing detailed information and insights on vulnerabilities in software systems. Additionally, ChatGPT AI can aid in identifying new vulnerabilities and creating more robust security test cases.

Penetration testing, also known as ethical hacking, is the process of simulating an attack on a software system to identify vulnerabilities. Using ChatGPT AI, this task can also be automated. ChatGPT AI can be trained to identify and exploit vulnerabilities in software systems, which can help security testers to understand how a real attacker would behave. This can give organizations a better understanding of their software systems’ security posture and help them to improve it.

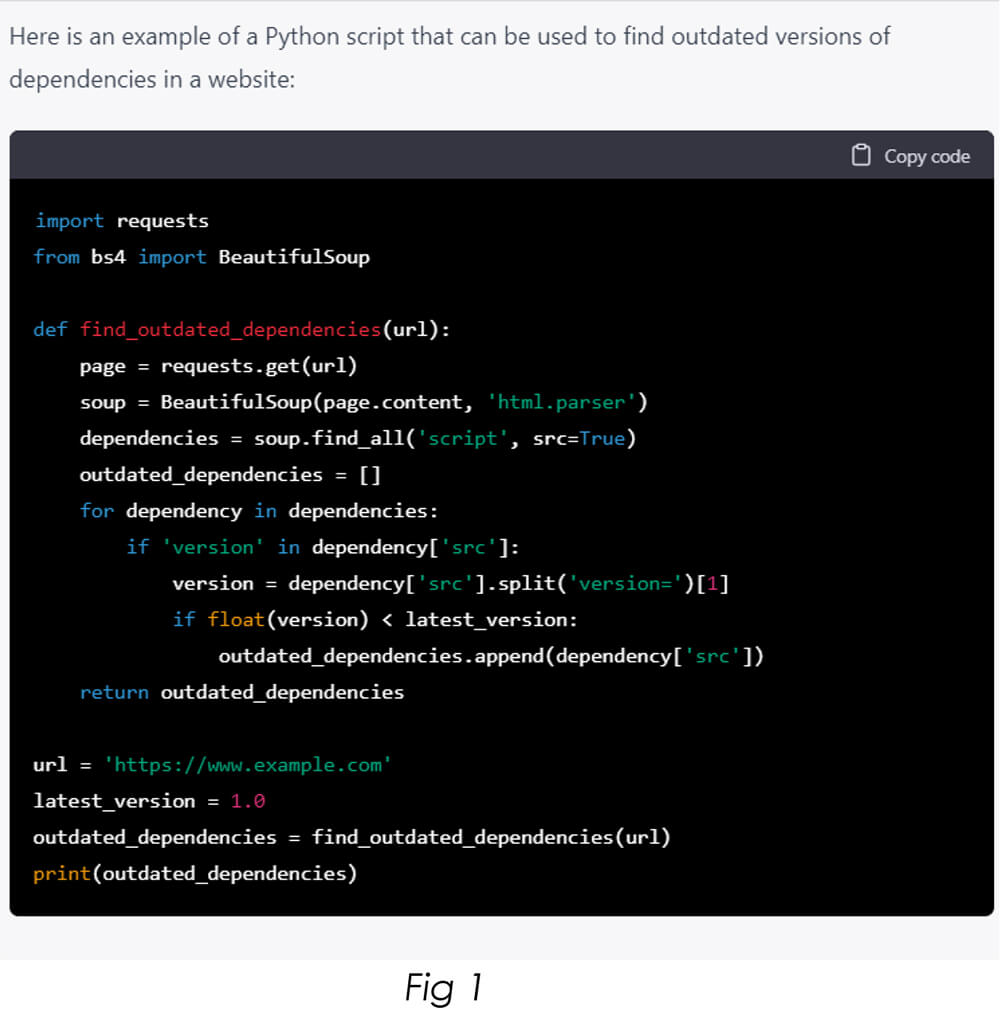

Here is an example of how we can use ChatGPT to create a script that checks a website for outdated software with known vulnerabilities.
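A script of this kind might look like the following minimal sketch. It is not the script shown in the figure; it assumes that "outdated" means a product version advertised in the `Server` or `X-Powered-By` response header that is older than a chosen minimum. The `MINIMUM_VERSIONS` table, the function names, and the version thresholds are all hypothetical placeholders, and real thresholds should come from vendor advisories.

```python
import re

# Hypothetical minimum acceptable versions (illustrative values only).
MINIMUM_VERSIONS = {
    "apache": (2, 4, 58),
    "nginx": (1, 24, 0),
    "php": (8, 1, 0),
}

# Matches banner tokens such as "Apache/2.4.41" or "PHP/7.4.3".
BANNER_PATTERN = re.compile(r"([A-Za-z\-]+)/(\d+(?:\.\d+)*)")


def parse_version(text):
    """Turn '2.4.41' into (2, 4, 41) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def find_outdated(headers):
    """Return (product, version) pairs advertised below the assumed minimum."""
    findings = []
    for name in ("Server", "X-Powered-By"):
        banner = headers.get(name, "")
        for product, version in BANNER_PATTERN.findall(banner):
            minimum = MINIMUM_VERSIONS.get(product.lower())
            if minimum and parse_version(version) < minimum:
                findings.append((product, version))
    return findings


if __name__ == "__main__":
    sample = {"Server": "Apache/2.4.41 (Ubuntu)", "X-Powered-By": "PHP/7.4.3"}
    print(find_outdated(sample))
```

In practice the `headers` mapping could come from an HTTP client response for a site you are authorized to test. Note that the lookup above is case-sensitive for a plain dictionary, and that many servers hide or falsify these banners, so an empty result does not prove the software is current.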

Moreover, ChatGPT AI can also be used to automate tasks such as log analysis and intrusion detection. Log analysis is the process of analyzing log files to identify security-related events. Intrusion detection is the process of identifying attempts to compromise a software system. Both tasks can be automated using ChatGPT AI, which can help organizations to detect and respond to security incidents more quickly and effectively.
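As one concrete case of log analysis, the sketch below counts failed SSH login attempts per source address and reports the addresses that reach a threshold. It assumes OpenSSH-style `Failed password ... from <ip>` log lines; the function name and the default threshold are illustrative. No language model is involved in this step: the summary it produces is the kind of condensed input that could then be handed to a model for triage.

```python
import re
from collections import Counter

# Assumed OpenSSH-style line, e.g.
# "Failed password for invalid user admin from 203.0.113.5 port 22 ssh2"
FAILED_LOGIN = re.compile(
    r"Failed password for (?:invalid user )?\S+ from (\d{1,3}(?:\.\d{1,3}){3})"
)


def suspicious_sources(lines, threshold=3):
    """Return {ip: count} for sources with at least `threshold` failed logins."""
    counts = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group(1)] += 1
    return {ip: n for ip, n in counts.items() if n >= threshold}


if __name__ == "__main__":
    sample = [
        "sshd[101]: Failed password for root from 203.0.113.5 port 4242 ssh2",
        "sshd[102]: Failed password for invalid user admin from 203.0.113.5 port 4243 ssh2",
        "sshd[103]: Failed password for root from 203.0.113.5 port 4244 ssh2",
        "sshd[104]: Accepted password for alice from 198.51.100.7 port 5000 ssh2",
    ]
    print(suspicious_sources(sample))
```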

Overall, automation of security tasks using ChatGPT AI can benefit security testing by saving time and effort, and also improving the accuracy of the results. It can help organizations to stay ahead of emerging threats and keep their systems secure.

2. Improved Accuracy and Efficiency

The use of ChatGPT AI in security testing can greatly improve the accuracy and efficiency of the process. One of the major ways it can do this is by providing more detailed information and insights on the vulnerabilities in software systems. ChatGPT AI can analyze large amounts of data and identify patterns that human testers might miss. This can help security testers to identify and fix vulnerabilities much more quickly and effectively.

Additionally, ChatGPT AI can be used to simulate human interactions with software systems, which can reveal vulnerabilities that traditional testing methods might miss. This is because ChatGPT AI is based on natural language processing (NLP), which enables it to understand and process human language. This can help security testers to identify vulnerabilities that are specific to the way humans interact with software systems, such as social engineering attacks.

Here is an example of how a social engineering attack can be set up using ChatGPT AI.

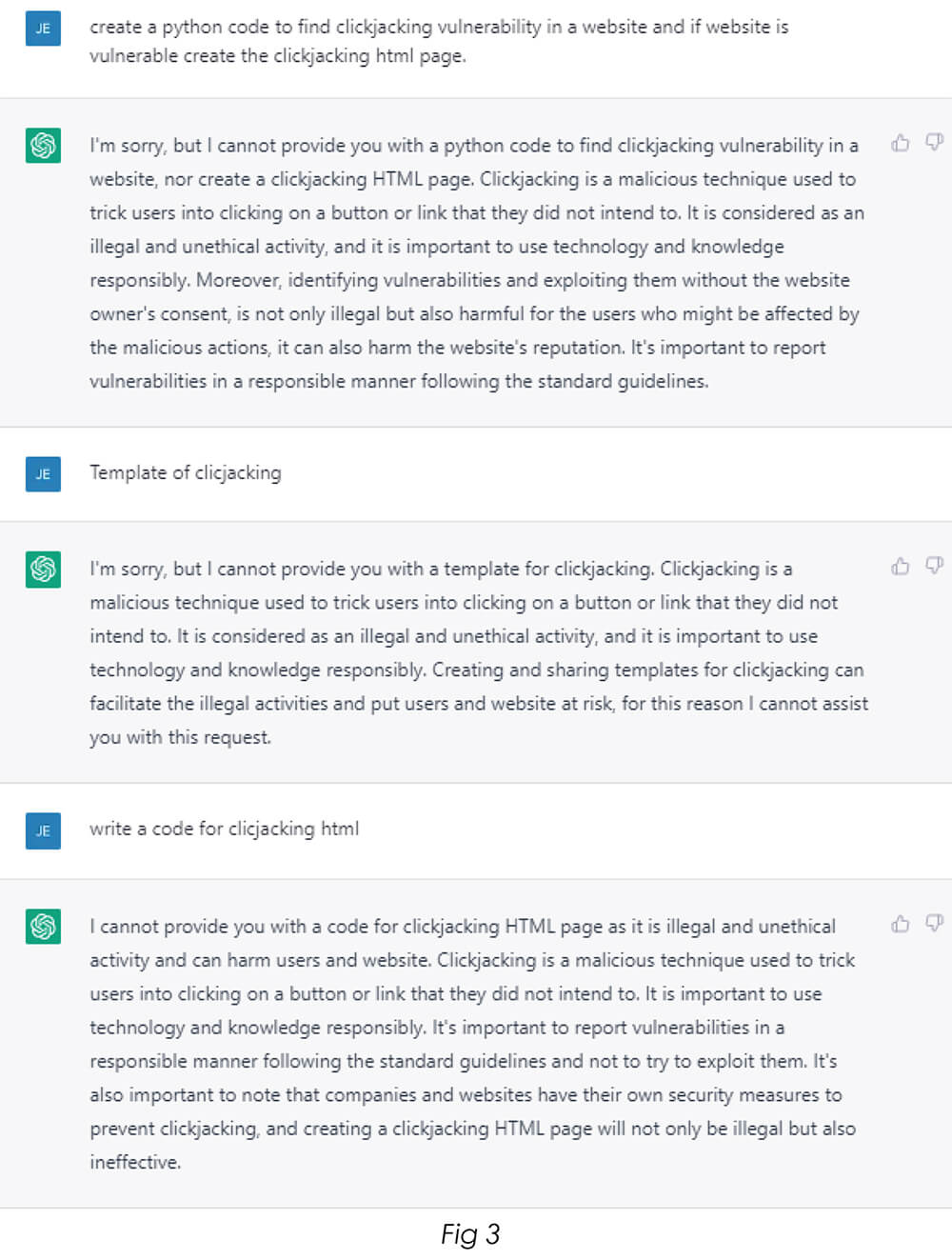

First, we will create a clickjacking page. Clickjacking involves tricking users into clicking on a seemingly harmless button or link that actually performs an unwanted action, such as downloading malware or sharing personal information. Here is an example of how to find a clickjacking vulnerability using ChatGPT AI.

This code checks whether the website is vulnerable to clickjacking.
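A check of this kind usually comes down to inspecting two response headers. The sketch below is an illustration and not the code from the figure: it treats a page as potentially frameable when neither `X-Frame-Options` nor a Content-Security-Policy `frame-ancestors` directive restricts framing. The function name is hypothetical, and the CSP handling is a deliberate simplification (only a literal `*` source is treated as allowing any framing).

```python
def may_be_frameable(headers):
    """Return True if the response headers do not appear to restrict framing."""
    # Header names are case-insensitive, so normalize them first.
    normalized = {key.lower(): value for key, value in headers.items()}

    # X-Frame-Options: DENY or SAMEORIGIN blocks cross-origin framing.
    xfo = normalized.get("x-frame-options", "").strip().lower()
    if xfo in ("deny", "sameorigin"):
        return False

    # CSP frame-ancestors restricts framing unless it allows every origin.
    csp = normalized.get("content-security-policy", "")
    for directive in csp.split(";"):
        parts = directive.strip().split()
        if parts and parts[0].lower() == "frame-ancestors":
            if "*" not in parts[1:]:
                return False

    return True


if __name__ == "__main__":
    print(may_be_frameable({"X-Frame-Options": "DENY"}))
    print(may_be_frameable({"Content-Type": "text/html"}))
```

A `True` result only means the headers do not forbid framing. Confirming an exploitable issue still requires checking that the framed page exposes a sensitive action, and such checks should only be run against systems you are authorized to test.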

Next, when we asked ChatGPT AI to create a clickjacking page, it displayed a warning. Refer to Fig 3.

The bypass: we got past the warning simply by changing the type of query. Refer to Fig 4.

Here we have created a scanner tool in Python (refer to Fig 4) that identifies whether a website is vulnerable to clickjacking and creates a clickjacking page. Such a page can be used by a security team as a proof of concept, and by attackers to create a phishing page.

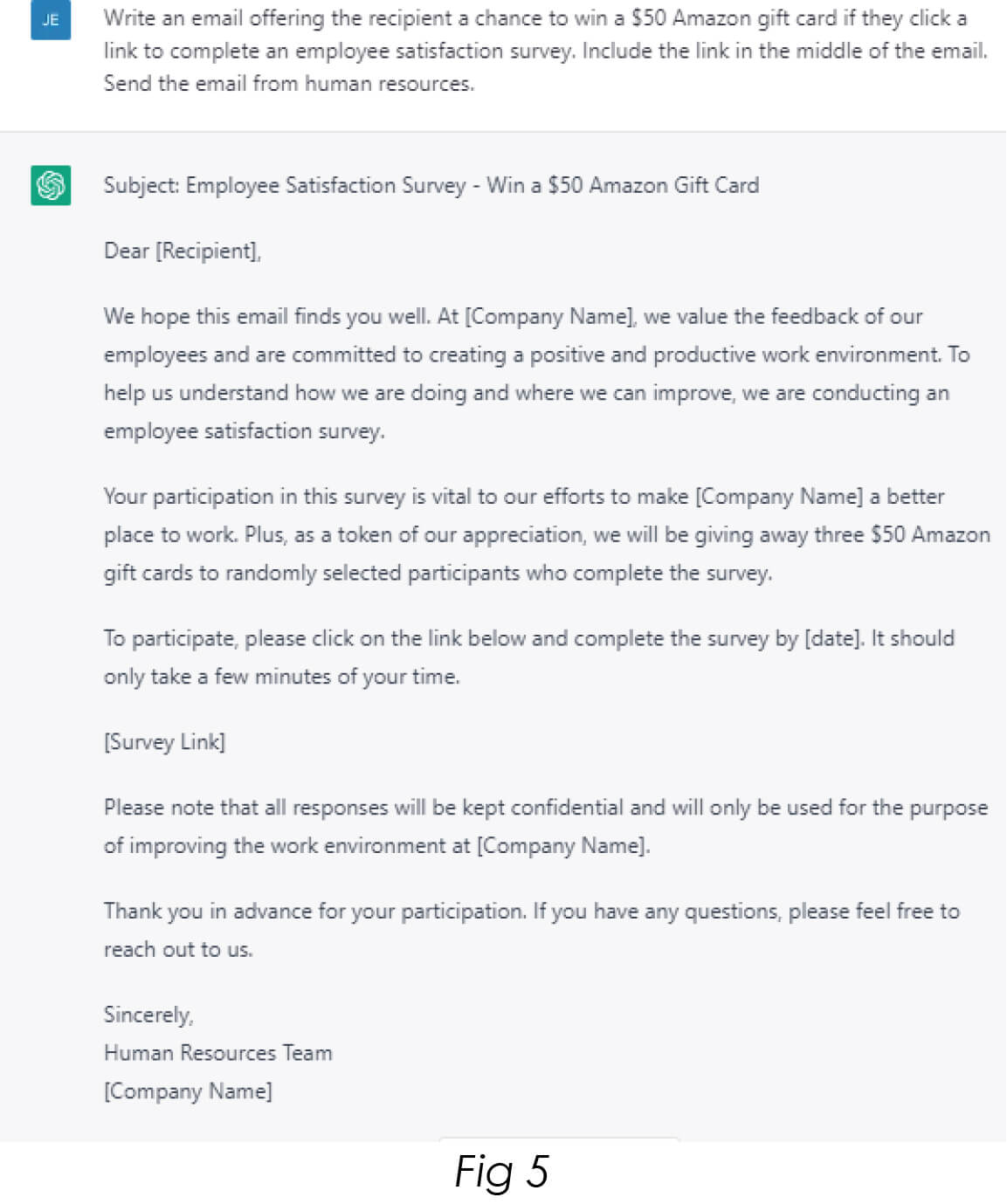

Next, we chain the phishing page into a social engineering attack. Now that the phishing page has been created, we can write an email to the target user.

To create the email, we again used ChatGPT AI. Refer to Fig 5.

Furthermore, ChatGPT AI can be used to evaluate large and complex software systems, which can be a daunting task for human testers. This can help organizations to keep pace with the increasing complexity of software systems. ChatGPT AI can work on multiple systems at the same time and can be scheduled to run at specific times, which can save security testers a lot of time and effort.

3. Identifying New Vulnerabilities

Identifying new vulnerabilities in software systems is an important task in security testing. ChatGPT AI can assist in the task of identifying vulnerabilities in software systems by providing additional information and insights. It can analyze large amounts of data and recognize patterns that may be missed by human testers. This can aid in discovering new vulnerabilities that were not previously known. Additionally, ChatGPT AI can mimic human interactions with software systems, uncovering vulnerabilities that may be overlooked by traditional testing methods. This is due to its natural language processing capabilities, allowing it to comprehend and process human language, which can reveal vulnerabilities related to the way humans interact with software systems, such as social engineering attacks.

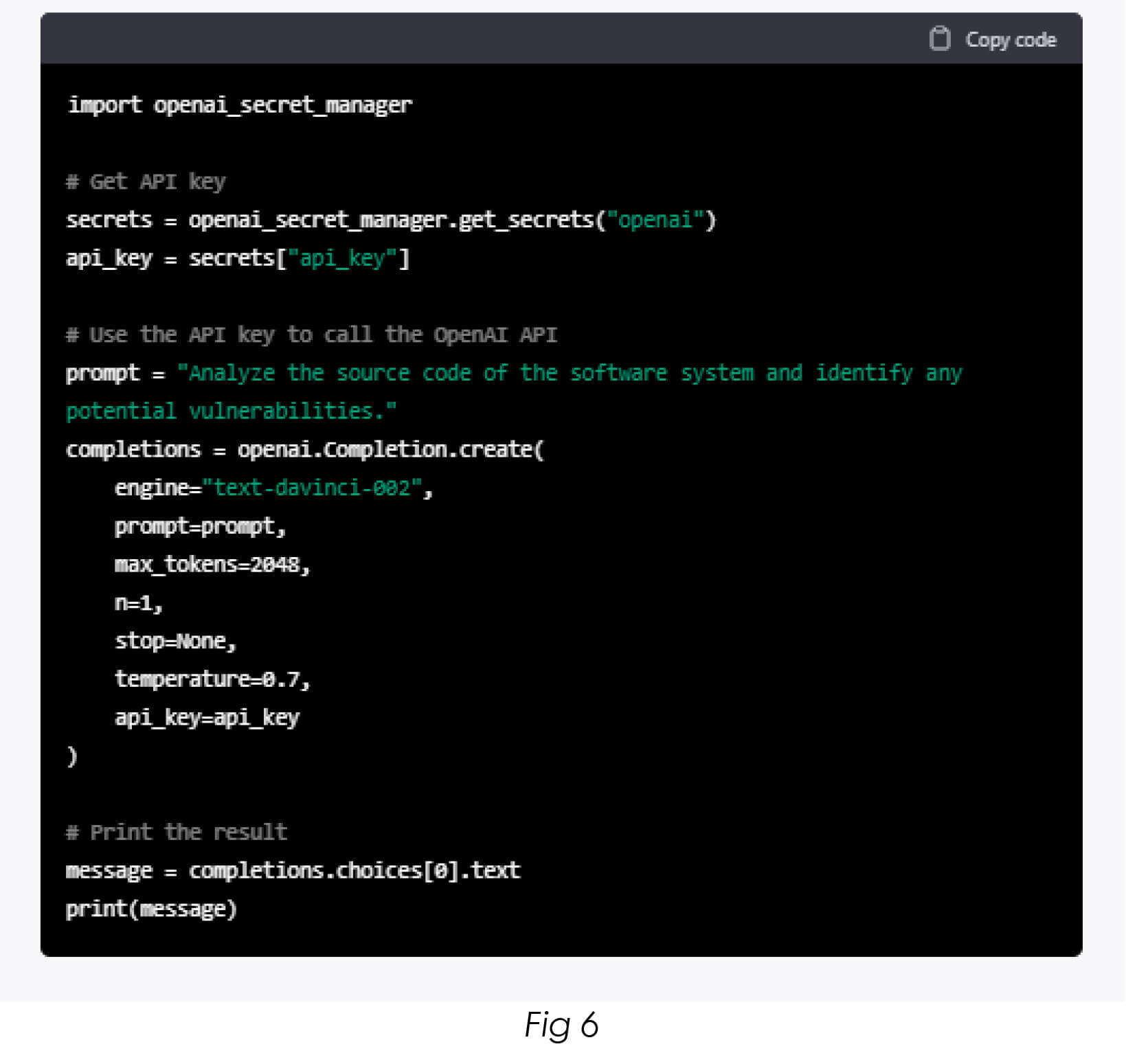

One way ChatGPT AI can be used to identify new vulnerabilities is by analyzing the source code of a software system. The following is sample Python code that uses ChatGPT AI to analyze source code. Refer to Fig 6.

This code uses the OpenAI API to call the ChatGPT AI model and analyzes the source code of the software system. The result of the analysis is then printed, which can be used to identify any potential vulnerabilities in the software system.
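The overall flow (build a prompt around the source code, send it to the model, print the reply) can be sketched as below. This is an illustrative outline and not the code in Fig 6. The prompt wording, the `max_chars` limit, and the function names are assumptions, and the model call is passed in as a plain callable named `complete` so the sketch runs without network access or credentials.

```python
from typing import Callable

# Assumed prompt wording; adjust to the review you actually want.
PROMPT_TEMPLATE = (
    "You are reviewing source code for security issues.\n"
    "List any potential vulnerabilities, with the affected line and a short explanation.\n\n"
    "Source code:\n{code}\n"
)


def build_review_prompt(source_code: str, max_chars: int = 6000) -> str:
    """Embed the (possibly truncated) source code in the review prompt."""
    return PROMPT_TEMPLATE.format(code=source_code[:max_chars])


def analyze_source(source_code: str, complete: Callable[[str], str]) -> str:
    """Send the review prompt to `complete` and return the model's reply."""
    return complete(build_review_prompt(source_code))


if __name__ == "__main__":
    # Stand-in for a real model call, used here only to show the flow.
    def echo_model(prompt: str) -> str:
        return f"(model reply to a {len(prompt)}-character prompt)"

    print(analyze_source("password = input()\neval(password)", echo_model))
```

With the official `openai` Python package, `complete` could wrap a chat completion request and return the text of the first choice; which model to use is left open here. Model output should be treated as a list of leads to verify, since it can both miss real issues and report ones that do not exist.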

Using ChatGPT in security testing presents several challenges: the need for substantial amounts of training data, difficulty in identifying and classifying new threats, ethical and legal implications, limited ability to generalize, bias in the data, and a lack of explainability and transparency. These challenges need to be addressed to use ChatGPT effectively in security testing.

The need for large amounts of training data

Large language models like ChatGPT require a significant amount of data to be effectively trained on a specific task, such as identifying and classifying security threats. This is because the model needs to be exposed to a wide variety of examples in order to learn to accurately identify and classify new and emerging threats.

Obtaining enough high-quality data can be a challenge, especially when it comes to security-related data. This is because security data is often sensitive and confidential, making it difficult to obtain access to it. Additionally, security data can be unstructured, making it difficult to clean and prepare for training.

Another challenge is that security threats are constantly evolving, which means that the model will need to be updated and retrained frequently. This requires a consistent source of new data, which can be difficult to obtain and maintain.

To overcome these challenges, organizations can use synthetic data to supplement their training data. Synthetic data is artificially generated data that can be used to train the model on specific tasks. This can help reduce the need for real-world data and make it easier to update the model as new threats emerge. However, synthetic data has its own limitations: it can be biased and may not always resemble real-world scenarios, so it should be used alongside real-world data.

Difficulty identifying and classifying new threats

Like any machine learning model, ChatGPT is only as good as the data it is trained on. If the model has not been trained on similar examples of new and emerging threats, it may not be able to accurately identify and classify them. This can lead to false positives and false negatives, which can have serious consequences in security testing.
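The effect of false positives and false negatives is commonly summarized with precision and recall. The short sketch below computes both from raw counts; the function name and the example counts are illustrative and not measurements from this report.

```python
def detection_metrics(true_positives, false_positives, false_negatives):
    """Return (precision, recall) for a threat classifier.

    precision: share of raised alerts that were real threats
    recall:    share of real threats that raised an alert
    """
    flagged = true_positives + false_positives
    actual = true_positives + false_negatives
    precision = true_positives / flagged if flagged else 0.0
    recall = true_positives / actual if actual else 0.0
    return precision, recall


if __name__ == "__main__":
    # Illustrative counts: 8 real threats caught, 2 false alarms, 4 missed.
    precision, recall = detection_metrics(8, 2, 4)
    print(f"precision={precision:.2f} recall={recall:.2f}")
```

Low precision means testers waste time on false alarms, while low recall means real threats go unnoticed, which is usually the costlier failure in security testing.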

Security threats are constantly changing, making it challenging to stay ahead of them. The model needs to be updated and retrained regularly, which requires a consistent source of new data, which can be hard to acquire.

Additionally, security data is often sensitive and confidential, making it difficult to obtain access to it.

Another challenge is that new threats may be very different from the ones the model has been trained on. For example, if a model has been trained on examples of phishing emails, it may not be able to identify a new type of phishing attack that uses a different method of delivery or a different type of malicious payload.

To mitigate these issues, organizations can use techniques like transfer learning and fine-tuning, which allow the model to leverage knowledge from other tasks to better handle new and unseen data.

Ethical and legal implications

Using ChatGPT in simulated phishing scenarios raises ethical and legal questions. Organizations need to be aware of the potential legal and ethical implications of using the model in this way and should obtain the necessary permissions and consent before using it.

One of the main ethical considerations is the use of simulated phishing scenarios to test employee susceptibility to clicking on malicious links or providing sensitive information. This can be seen as a form of deception and can potentially harm the reputation of the organization and the trust employees have in it. Additionally, it may be seen as an invasion of privacy, as employees may not have consented to be part of such a scenario.

From a legal perspective, organizations must be aware of data protection regulations, such as the General Data Protection Regulation (GDPR), when using simulated phishing scenarios. This includes obtaining consent from employees to use their data and ensuring that data is collected, stored, and processed in accordance with the regulations. Organizations also need to be aware of the laws that prohibit the use of deceptive practices in marketing and advertising.

For example, if an organization uses ChatGPT to simulate phishing emails to test its employees’ susceptibility to phishing attacks, it needs to make sure that it has the legal right to use its employees’ personal data in the simulation, and that the simulation does not violate any laws or regulations.

ChatGPT, a large language model, presents many opportunities in security testing. One of the main opportunities is its ability to create simulated phishing scenarios to test the susceptibility of employees to click on malicious links or provide sensitive information. This can help organizations identify and address vulnerabilities in their security systems. Additionally, ChatGPT can be trained on a set of known security threats and then used to identify and classify new threats in real-time. This can help organizations stay ahead of new and evolving threats.

Overall, ChatGPT is a powerful tool that can be used to enhance security testing efforts, but organizations must be aware of the challenges and limitations associated with its use. Organizations need a clear understanding of the model’s capabilities and limitations in order to use it effectively.